This morning I opened my Twitter account and the “while you were away” feature had an interesting tweet highlighted. It was from the National Observer, which describes itself as:

“a new publication founded by the Vancouver Observer’s award-winning team of journalists. The National Observer focuses on news through the lens of energy, environment and federal politics.”

The tweet said:

#Oilsands as toxic as peanut butter? That’s what govt’s PR campaigns say: T.Berman http://bit.ly/natobstzp1 @NoTarSands pic.twitter.com/kGzJSvQ9kr

Needless to say, I was intrigued. Was the government really paying a PR company to lie about the toxicity of oil sands, which we all know are very toxic? So I clicked on the link, which brought me to a story at the National Observer called: Time for honest talk and messy solutions in the oil sands, authored by a very respected name in the environmental industry: Tzeporah Berman. I have been following Dr. Berman’s career since the 1990s, and when she speaks it carries a lot of weight. Her National Observer blogger page describes her as:

Tzeporah Berman BA, MES, LLD (honoris causa), Adjunct Professor York University Faculty of Environmental Studies is author of This Crazy Time: Living Our Environmental Challenge, Knopf Canada.

The combination of the tweet and the author made me even more interested, so I started reading the story, and there was the claim, right in the first paragraph:

The debate over energy, oil sands and pipelines in Canada is at best dysfunctional and at worst a twisted game that is making public relations professionals and consultants on all sides enormous amounts of money. Documents obtained through Freedom of Information routinely show our own government hiding scientific reports, meeting secretly to craft PR strategies and even policy with the companies they are supposed to regulate and millions of dollars are spent on ads trying to convince Canadians that the oil sands are as toxic as peanut butter [emphasis mine] and that without them our hospitals will close.

Unfortunately, the story didn’t contain any references to the source of the claim that “millions of dollars are spent on ads trying to convince Canadians that the oil sands are as toxic as peanut butter”. As regular readers of my blog know, I have written a lot about both toxicology and the oil sands, so I was very interested in this claim: if an ad campaign had said something of the sort, I would have expected to notice it, especially if millions of dollars had been spent on it. I did a cursory web search and could find nothing on the topic. So I decided to tweet back to the Observer and ask a quick question:

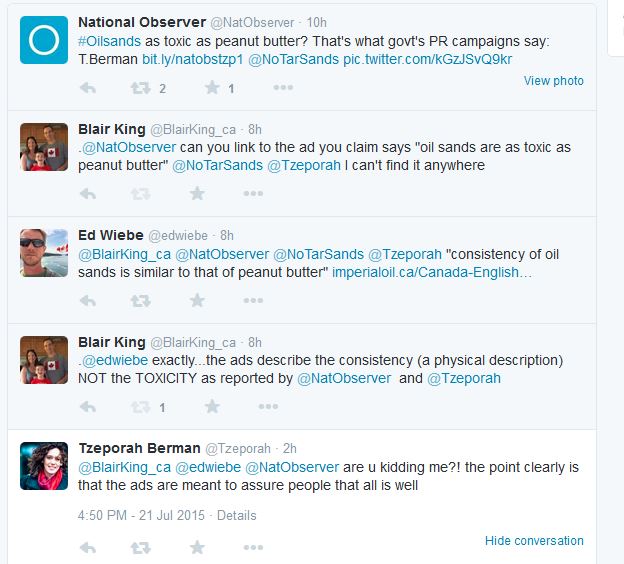

.@NatObserver can you link to the ad you claim says “oil sands are as toxic as peanut butter” @NoTarSands @Tzeporah I can’t find it anywhere

While waiting, I re-read the article and noticed that it was a reprint of a commentary published in the Toronto Star. So I went to the Star’s web site and found the commentary, titled: It’s time to talk about the oilsands. The article contained an almost identical paragraph:

Documents obtained through Freedom of Information routinely show our own government hiding scientific reports or meeting secretly to craft PR strategies with the companies they are supposed to regulate, while millions of dollars are spent on ads trying to convince Canadians that the oilsands are as toxic as peanut butter[emphasis mine].

Happily, the article contained an embedded link to another National Observer story: Harper Conservatives’ secret tactics to protect oil sands: FOI details, which contained a lot of links to Freedom of Information (FOI) results. I scanned the released FOI documents and was unable to find the claim, so I decided to try a different approach. I did a Google search for the words “oil sands”, “toxic” and “peanut butter” and was only partially successful. I did find a useful link, but it was to a story in The Tyee: Gooey Oil Sands Lies PR Flacks Tell: Call BS! The article described a plan to compare the viscosity of bitumen to peanut butter. The critical line from the story was:

One cheery communication compared the viscosity of bitumen, an ultra heavy crude, to peanut butter [emphasis mine]. Bitumen definitely looks, feels and behaves like asphalt but it sure as hell doesn’t taste like peanut butter.

Using this information, I did another search and found many hits where the viscosity (consistency to the non-chemist) of oil sands and bitumen was compared to peanut butter. Now this made a lot of sense to me. Most people don’t understand what bitumen is like in real life, and a PR program that makes it sound friendlier by comparing its viscosity and consistency to peanut butter might both allow readers to gain some understanding of the substance and maybe earn some positive spin.

The problem with this discovery is that it was utterly harmless and completely inconsistent with the Berman articles. The PR campaign was designed to provide factually correct information: that bitumen has a similar consistency to peanut butter. It certainly did not include any suggestion that they would try to convince the public that oil sands were “as toxic as peanut butter”. Now some of you might call me a bit of a pedant, but let’s put this into perspective. We know as a matter of fact that oil sands are very toxic and should not be ingested. If the government was spending “millions” to try to convince us that oil sands were not toxic, that would be a fantastically important story. Governments aren’t supposed to lie to the public, and when they are caught doing so they need to be held to account. So a headline saying that the government was spending “millions of dollars” trying to “convince Canadians that the oilsands are as toxic as peanut butter”, which it knows is an outright lie, would be an important story as well as the first question in Question Period the next day.

My natural impression was that this line represented a simple mistake, a typo or some bad typesetting, and so I politely contacted the National Observer, which tweeted back the following:

@martynschmoll @BlairKing_ca @edwiebe This is an op-ed and, indeed, the phrase is a metaphor on the first paragraph http://bit.ly/natobstzp1

Now remember, this was in the same discussion thread that started with a tweet where the National Observer declared: “#Oilsands as toxic as peanut butter? That’s what govt’s PR campaigns say”. Even if the original use in the story was a metaphor, the tweet by the National Observer was anything but: it was a statement of fact. It is equally clear, however, that the Op-Ed itself did not present the phrase as a metaphor. I’m not sure how the sentence “millions of dollars are spent on ads trying to convince Canadians that the oil sands are as toxic as peanut butter” can be read as anything but a statement of fact. A further concern is that a national news service has editors who cannot distinguish between a metaphor and a direct statement of fact. There is also a second subtext to their tweet: essentially they are saying that, since it is an Op-Ed, the article doesn’t have to be factual. I thought news services were supposed to correct errors, not promulgate them.

Having received that disappointing response, I tried Dr. Berman. Her response was:

@BlairKing_ca @edwiebe @NatObserver are u kidding me?! the point clearly is that the ads are meant to assure people that all is well.

Now remember, Dr. Berman notes on every platform I have been able to locate that she is an “Adjunct Professor York University Faculty of Environmental Studies.” When an academic is shown to have made an error of fact, the typical approach is to quickly correct the error and thank the person who pointed it out, not to double down on the proposition. Dr. Berman may feel that the point of her various articles was to indicate that the “ads were meant to assure people that all is well”, but in the process she made a statement of fact (millions of dollars are spent on ads trying to convince Canadians that the oil sands are as toxic as peanut butter) that cannot be verified with the information provided. Admittedly she may have additional data confirming her statement, but she certainly hasn’t presented it for review. As it stands, she has made a statement of fact that the government was spending millions on a disinformation program. The documentation I have been able to find suggests that the government spent an undisclosed amount of money providing factually correct information with a government-friendly spin.

I have been asked by a couple of people online why I am once again banging on my drum. There is an oft-misused term out there: “factoid”. Most people, when asked, think that it refers to a small, interesting and (most importantly) true fact, but factoid is actually defined as “an invented fact believed to be true because it appears in print”. Another closely related term was coined by Stephen Colbert: truthiness, which is defined as “the quality of seeming to be true according to one’s intuition, opinion, or perception without regard to logic, factual evidence, or the like”. In the process of looking up information for this blog posting I found over a dozen different sources already repeating the factoid that millions of dollars are/were spent on ads trying to convince Canadians that the oil sands are as toxic as peanut butter. A few months ago I tracked down a similar piece of misinformation, on the attribution of avian deaths to nuclear facilities, that had been given time to fester. I wrote about it in a blog post (On estimates of avian deaths attributable to coal and nuclear facilities) where I showed how the data was clearly in error, but I was too late: that misinformation now has a life of its own. It shows up in sources as varied as Wikipedia and US World and National Report. Maybe if someone had pointed out the issues with the information on the day it was released, that factoid would not be the number one talking point on the topic to this day.

Whether you like our government or not, in this case it appears not to have done what it is reported to have done. The factoid presented has a ring of truthiness that will appeal to the government’s opponents and, as demonstrated, is already running rampant on the internet. The best way to fight a factoid is with facts. As I wrote earlier, I welcome anyone presenting a document which shows that the government was paying PR people to run a disinformation campaign on this topic, but that is not what I have uncovered in this case. The individuals responsible for promulgating this factoid have a responsibility, if they determine it to be incorrect, to correct the record. I look forward to their doing so shortly.

For those who like the visuals:

Author’s Notes:

2015-07-23: The National Observer has quietly adjusted their text to remove the words “as toxic as” and replace them with the word “like”. Believe it or not, that makes all the difference. The change does little to tone down the article, but that minor change addresses all my concerns about spreading misinformation. While the change is not noted anywhere, it represents a great start. Thank you, Dr. Berman.

2015-07-23: DeSmog blog has now fixed their post and included a correction notice. A great presentation of how a correction should be done to avoid what one commenter calls “zombie evidence”. Great work DeSmog!

Now only the Toronto Star has the incorrect data….